UX&UI / 2020

Designing a Better User Experience for Network Elements Management Systems through a Simulation-based Design Method

Responsibilities: User Research, User Flow Design, UI Design & UX Evaluation

The Challenge

My role

I was brought in as lead UX designer to iterate on SystemHub. Using a simulation-based design method, I conducted a complete task analysis and user flow evaluation, then refined the user flow and UI design.

#Think

Stakeholder Interviews

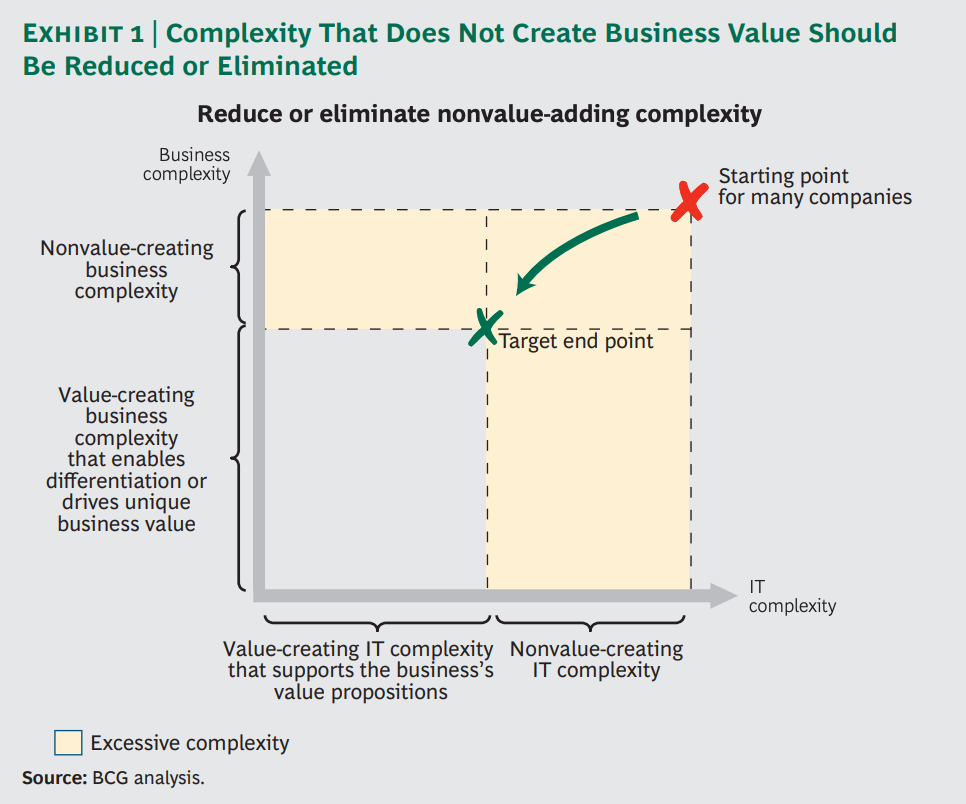

Research Guided by the Idea: Avoid Unnecessary Complexity

Simulation Testing

Steps where the system can’t know what to do, e.g. entering a parameter for the first time.

Navigating from one view to another (pages, tabs, windows).

Entering parameters and values that were already entered earlier.

Functions that don’t move the user towards the desired outcome in their use situation, e.g. changing an item’s status in order to modify or use it.
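The four step types above lend themselves to a simple tally. The following is a minimal sketch, not the tool used in the project: the `StepType` names, the `step_shares` helper, and the sample steps are all hypothetical and only illustrate how each step in a simulated task flow could be labeled and counted.

```python
from collections import Counter
from enum import Enum


class StepType(Enum):
    """Hypothetical labels for the four step types described above."""
    NECESSARY = "necessary input"          # the system can't know the value
    NAVIGATION = "navigation"              # moving between pages, tabs, windows
    REPEATED = "repeated input"            # re-entering earlier values
    UNNECESSARY = "unnecessary function"   # doesn't move the user to the outcome


def step_shares(steps):
    """Return the percentage of steps per type, rounded to one decimal."""
    total = len(steps)
    if total == 0:
        return {step_type: 0.0 for step_type in StepType}
    counts = Counter(step_type for _, step_type in steps)
    return {t: round(100 * counts[t] / total, 1) for t in StepType}


# Illustrative steps only; these are not taken from the actual simulation.
steps = [
    ("Open the lookup table list", StepType.NAVIGATION),
    ("Enter a new table name", StepType.NECESSARY),
    ("Switch to the editor tab", StepType.NAVIGATION),
    ("Re-enter the table name", StepType.REPEATED),
    ("Change table status before editing", StepType.UNNECESSARY),
]

shares = step_shares(steps)
for step_type, share in shares.items():
    print(f"{step_type.value}: {share}%")
```

Counting steps this way keeps the evaluation mechanical: each step gets exactly one label, and the shares fall out of the tally.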

Use Scenarios

One of the key features of SystemHub is the Lookup Service, where system administrators “customize” (or “fine-tune”) specific parts of a workflow in services (i.e., the values of certain workflow variables or parameters) without changing the workflow or creating a new version of it. The Lookup Service GUI enables the system administrator to define, delete, and maintain lookup tables.

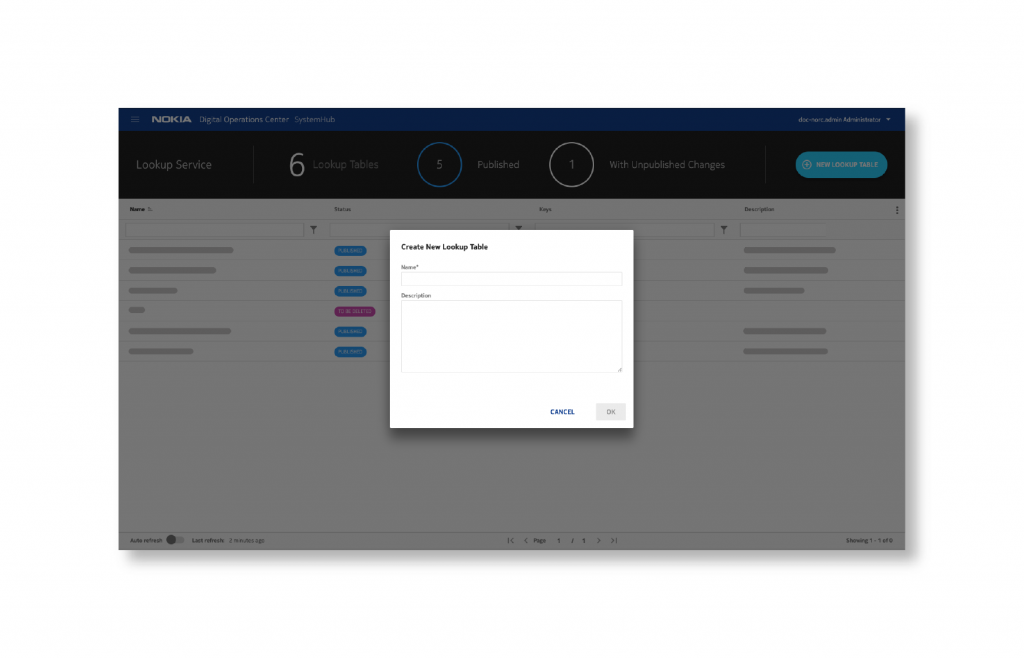

Use case 1: define a new lookup table

Users can create a new lookup table by defining its name, description, and other relevant parameters.

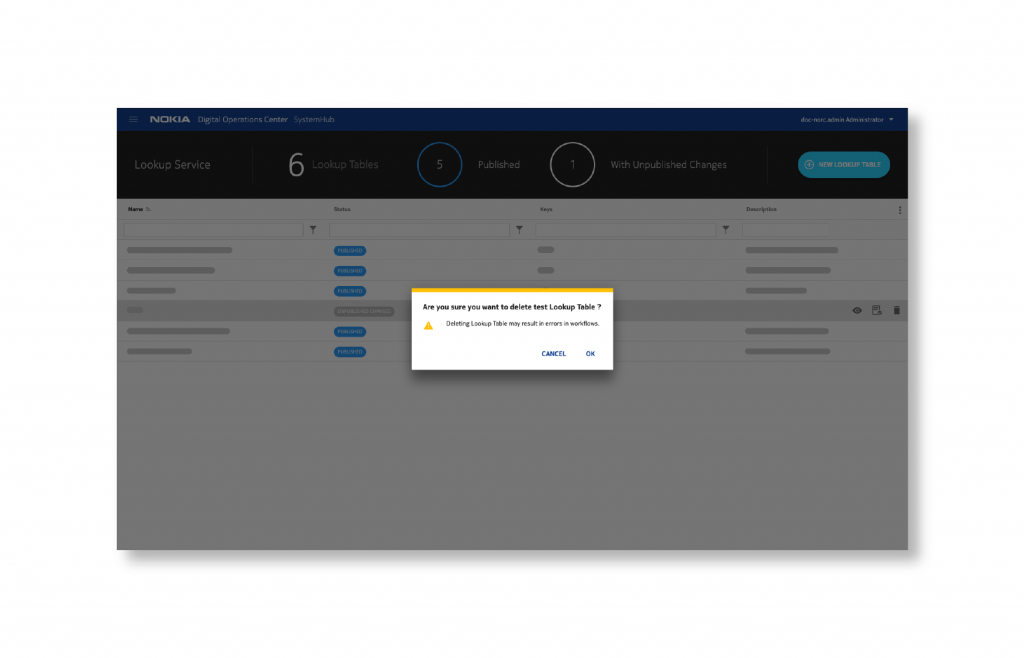

Use case 2: delete a lookup table

Users can delete a lookup table and all data within it. Because this may break many workflows, the UI warns users prominently and asks for clear confirmation before deleting.

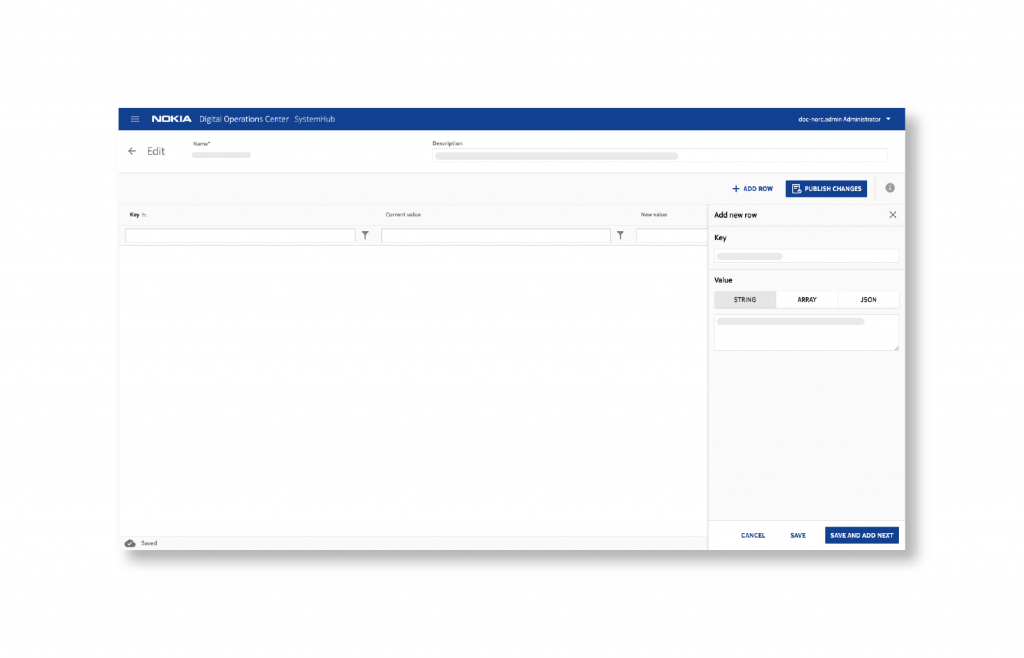

Use case 3: edit a lookup table

Users can edit the contents of a selected table. The UI provides options for locating the desired data: users first find a specific key and then edit its value.

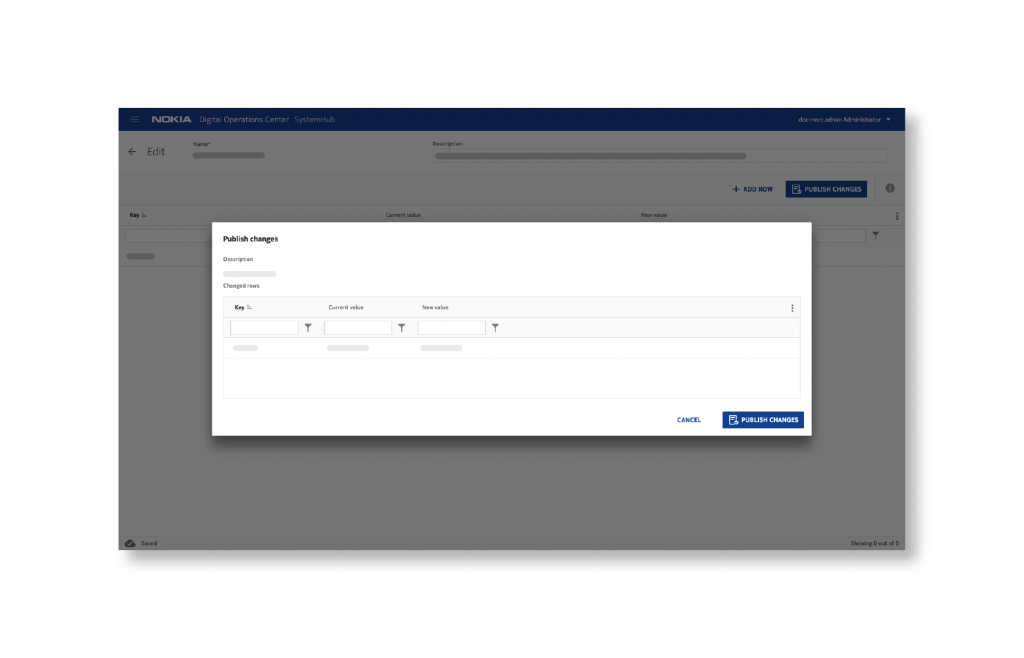

Use case 4: publish changes to a lookup table

The lookup table editor UI enables controlled deployment of changes made to a lookup table. The changes become “visible” only when the Lookup Service editor user explicitly publishes them.

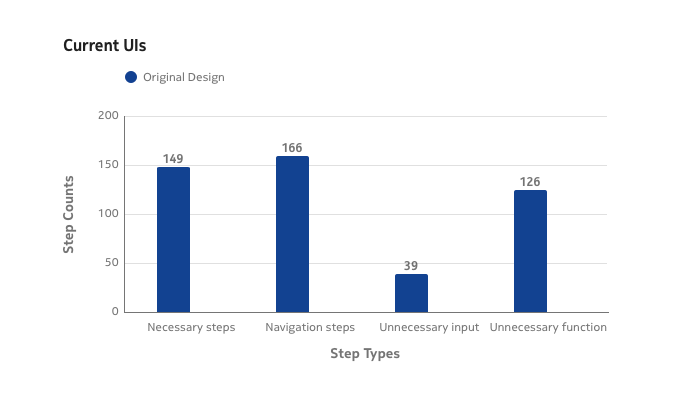

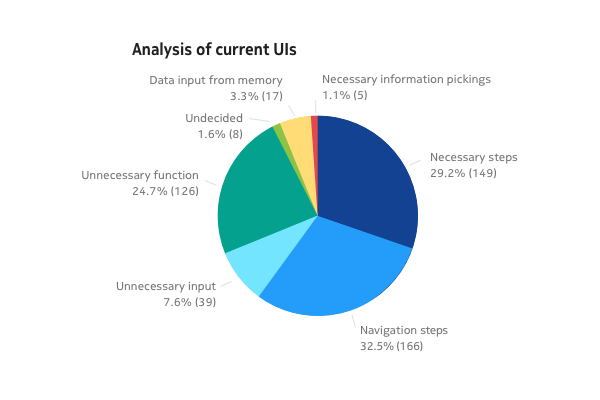

Simulation Test Results

The simulation test showed that necessary steps made up only 29.2% of all task steps. Unnecessary functions and inputs accounted for 32.3% of the steps, and navigation for 32.5%. Users were required to remember information in 3.3% of the steps.

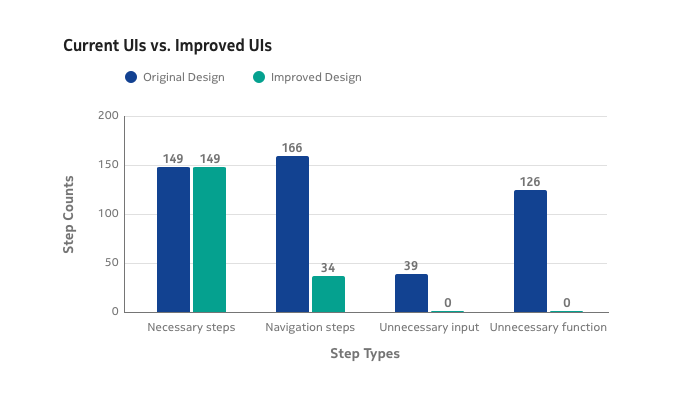

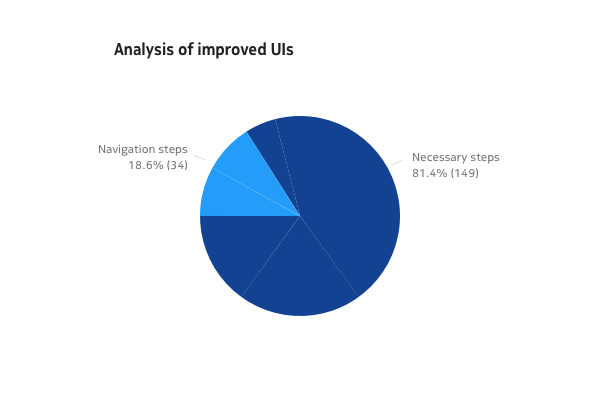

Design Improvement

The improved UI design followed almost mechanically from the analysis. Unnecessary inputs and functions were left out, and navigation was minimized by reorganizing the data and functions in the UI. As a result, the total number of actions was greatly reduced. Some new features were also added to improve error tolerance, so that users could recover from mistakes.

How the research was compiled and shared

Once the research and redesign were complete and ready to jumpstart a new iteration, I placed the research findings into a kickoff slide deck that I presented to key stakeholders and decision-makers. From this, we agreed on the direction of the iteration, the timeline, and the team needed to carry it out. We didn’t iterate for the sake of iterating, so this part of the process helped us evaluate each iteration.

#Make

Mockups

Once the design direction was established, I began creating the structure and hierarchy of the design, as well as the aesthetic treatments. Full user flows and unique interactions were prototyped for sharing internally and testing with users.

User Testing

I ran user tests and session recordings regularly to observe organic user behavior and to check the total task time (from the first trigger until the result is reached). Total task time is a direct, objective measure of productivity. In theory, overall efficiency should have increased to 2.8 times what it had been before. Surprisingly, users completed the task about 3.5 times faster, so the measured efficiency improvement is around 3.5x. The redesign did not include utility improvements. With those, the overall productivity improvement is likely to be in the range of 4x to 5x.
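As a rough illustration of how an efficiency factor like this can be derived from total task time, the sketch below divides the baseline time by the redesigned time. The `speedup` helper and both timings are hypothetical and chosen only to show the arithmetic; they are not measurements from the study.

```python
def speedup(baseline_seconds: float, redesigned_seconds: float) -> float:
    """Return how many times faster the redesigned flow is than the baseline."""
    if baseline_seconds <= 0 or redesigned_seconds <= 0:
        raise ValueError("task times must be positive")
    return baseline_seconds / redesigned_seconds


# Hypothetical total task times, from first trigger to result.
old_time = 420.0   # seconds in the original UI (assumed value)
new_time = 120.0   # seconds in the redesigned UI (assumed value)

factor = speedup(old_time, new_time)
print(f"Efficiency improvement: {factor:.1f}x")
```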

#Make

Impact

The redesigned, scenario-based SystemHub simulation sequence was used in the customer demo and received extremely positive feedback from customers. Demo requests have increased by 35% compared with 2019.